Crack the Code: XGBoost Hyperparameter Tuning Demystified

XGBoost (Extreme Gradient Boosting) is one of the most powerful machine learning algorithms, widely used in competitions and industry applications. However, its performance heavily depends on selecting the right hyperparameters.

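Grid Search (Brute-Force) is the most straightforward method for hyperparameter tuning: it systematically tests every combination of the parameter values you specify and keeps the configuration that scores best. It is computationally expensive, because the number of combinations grows multiplicatively with every parameter you add. In return, it is guaranteed to find the best-scoring combination among the values in your grid, as measured by your validation metric.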

Example:

Suppose you want to tune two hyperparameters for an XGBoost model:

- max_depth: [3, 5]

- learning_rate: [0.1, 0.01]

Grid Search will try all combinations:

- max_depth=3, learning_rate=0.1

- max_depth=3, learning_rate=0.01

- max_depth=5, learning_rate=0.1

- max_depth=5, learning_rate=0.01

Each combination is trained and evaluated, and the one with the best validation score (e.g., highest accuracy or lowest RMSE) is selected.
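To make the brute-force idea concrete, here is a minimal, library-free sketch. `itertools.product` enumerates the Cartesian product of the value lists, which yields exactly the four combinations listed above, in the same order. The `evaluate` argument is a hypothetical callable standing in for "train a model and return its validation score" (higher is better), and `toy_score` is a placeholder, not a real model.

```python
from itertools import product
from typing import Callable, Dict, List, Tuple

# The two-parameter grid from the example above
grid = {
    "max_depth": [3, 5],
    "learning_rate": [0.1, 0.01],
}


def grid_search(grid: Dict[str, list],
                evaluate: Callable[[dict], float]) -> Tuple[dict, float, List[dict]]:
    """Brute-force search: score every combination and keep the best.

    `evaluate` is a hypothetical callable that trains a model with the given
    parameters and returns a validation score (higher is better).
    """
    names = list(grid)
    # Cartesian product of the value lists -> one dict per combination
    candidates = [dict(zip(names, values)) for values in product(*grid.values())]
    best_params, best_score = {}, float("-inf")
    for params in candidates:
        score = evaluate(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score, candidates


# Placeholder scoring function for illustration only (not a real model)
def toy_score(params: dict) -> float:
    return params["max_depth"] * params["learning_rate"]


best, score, tried = grid_search(grid, toy_score)
for params in tried:
    print(params)
print("Best:", best)  # Best: {'max_depth': 5, 'learning_rate': 0.1}
```

For error metrics such as RMSE, where lower is better, return the negated value so that "higher is better" still holds; scikit-learn uses the same convention for scorers like `neg_root_mean_squared_error`.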

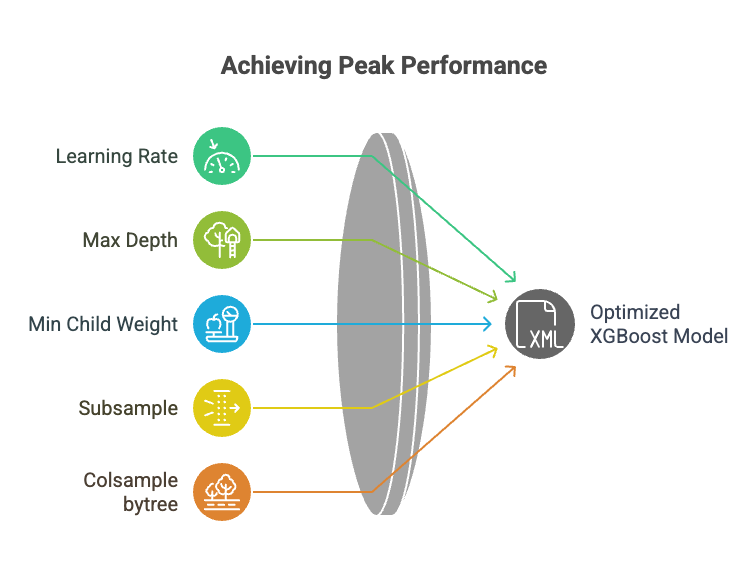

Key XGBoost Hyperparameters to Tune

These are the most impactful hyperparameters for XGBoost performance:

1. Core Parameters

- n_estimators: Number of boosting rounds (trees)

- learning_rate (eta): Step-size shrinkage to prevent overfitting

- max_depth: Maximum tree depth

- min_child_weight: Minimum sum of instance weight needed in a child

2. Regularization Parameters

- gamma: Minimum loss reduction required to make a split

- subsample: Fraction of samples (rows) used per tree

- colsample_bytree: Fraction of features (columns) used per tree

- lambda (L2 regularization) and alpha (L1 regularization), named reg_lambda and reg_alpha in XGBoost's scikit-learn wrapper

3. Learning Task Parameters

- objective: Learning objective (e.g., binary:logistic for binary classification)

- eval_metric: Evaluation metric for validation data

How Grid Search Works for XGBoost

Step-by-Step Process

- Define a parameter grid with possible values for each hyperparameter

- Train and evaluate an XGBoost model for every possible combination

- Use cross-validation to assess performance robustly

- Select the best model based on your evaluation metric

Example Parameter Grid

```python
param_grid = {
    'n_estimators': [50, 100, 200],
    'max_depth': [3, 5, 7],
    'learning_rate': [0.01, 0.1, 0.2],
    'subsample': [0.6, 0.8, 1.0],
    'colsample_bytree': [0.6, 0.8, 1.0],
    'gamma': [0, 0.1, 0.2]
}
```
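Before launching a search, it is worth checking how many models a grid implies. The candidate count is the product of the list lengths, and cross-validation multiplies it by the number of folds. This sketch uses `math.prod` (Python 3.8+) and assumes 5-fold CV, matching the full example below.

```python
from math import prod

# Same grid as above
param_grid = {
    'n_estimators': [50, 100, 200],
    'max_depth': [3, 5, 7],
    'learning_rate': [0.01, 0.1, 0.2],
    'subsample': [0.6, 0.8, 1.0],
    'colsample_bytree': [0.6, 0.8, 1.0],
    'gamma': [0, 0.1, 0.2],
}

# Number of candidates = product of the number of values per parameter
n_candidates = prod(len(values) for values in param_grid.values())
n_folds = 5
n_fits = n_candidates * n_folds

print(f"{n_candidates} candidates x {n_folds} folds = {n_fits} fits")
# prints: 729 candidates x 5 folds = 3645 fits
```

Six parameters with three values each give 3^6 = 729 candidates; adding a single extra value to any one list raises that to 4 × 3^5 = 972.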

Implementing Grid Search for XGBoost in Python

Complete Code Example

```python
import xgboost as xgb
from sklearn.datasets import load_breast_cancer
from sklearn.metrics import accuracy_score
from sklearn.model_selection import GridSearchCV, train_test_split

# Load dataset
data = load_breast_cancer()
X, y = data.data, data.target

# Split data: 80% for tuning/training, 20% held out for the final test
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Define XGBoost model
model = xgb.XGBClassifier(objective='binary:logistic', random_state=42)

# Parameter grid: 3 values for each of 6 parameters = 3**6 = 729 combinations
param_grid = {
    'n_estimators': [50, 100, 200],
    'max_depth': [3, 5, 7],
    'learning_rate': [0.01, 0.1, 0.2],
    'subsample': [0.6, 0.8, 1.0],
    'colsample_bytree': [0.6, 0.8, 1.0],
    'gamma': [0, 0.1, 0.2]
}

# Grid Search with 5-fold CV (729 candidates x 5 folds = 3,645 fits)
grid_search = GridSearchCV(
    estimator=model,
    param_grid=param_grid,
    scoring='accuracy',
    cv=5,
    n_jobs=-1,   # run fits in parallel on all CPU cores
    verbose=1
)

# Perform search (uses only the training set)
grid_search.fit(X_train, y_train)

# Results
print(f"Best parameters: {grid_search.best_params_}")
print(f"Best CV accuracy: {grid_search.best_score_:.4f}")

# Evaluate on test set; best_estimator_ has been refit on the full training set
best_model = grid_search.best_estimator_
y_pred = best_model.predict(X_test)
print(f"Test accuracy: {accuracy_score(y_test, y_pred):.4f}")
```

🔍 What This Code Does:

- Loads the breast cancer dataset, a built-in scikit-learn dataset with features describing tumors, used to classify whether they're malignant or benign.

- Splits the data into training (80%) and testing (20%) sets.

- Sets up an XGBoost classifier (a powerful machine learning model for classification tasks).

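- Defines a grid of parameters to try:

  - n_estimators: Number of trees.
  - max_depth: How deep each tree can go.
  - learning_rate: How much each new tree's contribution is scaled down (smaller values make the model learn more slowly).
  - subsample: What fraction of the training rows each tree sees.
  - colsample_bytree: What fraction of the features each tree sees.
  - gamma: The minimum loss reduction required to split a node (higher values make the trees more conservative).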

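- Uses GridSearchCV to try every combination of these parameters with 5-fold cross-validation: the training data is split into 5 parts, and each combination is trained on 4 parts and scored on the remaining one, rotating through all 5 so every combination gets an averaged score.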

- Finds and prints:

  - The best combination of parameters.
  - The best cross-validation accuracy from those combinations.

- Evaluates the best model on the test set and prints the final test accuracy.

🖨️ Example Output Explained:

Suppose the output is:

```text
Fitting 5 folds for each of 729 candidates, totalling 3645 fits
Best parameters: {'colsample_bytree': 0.8, 'gamma': 0.1, 'learning_rate': 0.1, 'max_depth': 5, 'n_estimators': 100, 'subsample': 0.8}
Best CV accuracy: 0.9648
Test accuracy: 0.9561
```

What this means:

- ✅ GridSearchCV tested 729 combinations (all possible combinations of the values you gave: 3 values for each of 6 parameters, or 3^6 = 729), each with 5-fold cross-validation, for 3,645 fits in total.

- 🏆 It found that the best combination of parameters is:

  - 100 trees
  - max depth of 5
  - learning rate of 0.1
  - using 80% of rows and 80% of columns for each tree
  - gamma value of 0.1

- 📈 On average, across the 5 folds, this setup gave 96.48% accuracy.

- 🧪 On the test set (data the model hasn't seen before), the accuracy was 95.61%. That is very good, and its closeness to the CV estimate suggests the tuned model generalizes well.

Alternatives to Grid Search:

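- Random Search: Samples a fixed number of random combinations (often more efficient for large spaces)

- Bayesian Optimization: Builds a probabilistic model of how the score depends on the hyperparameters and uses it to choose the next combination to try

- Optuna/Hyperopt: Frameworks that implement these smarter search strategies (both use Tree-structured Parzen Estimators by default)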

Best Practices and Common Pitfalls

Do's

✔ Start by tuning the 2-3 most impactful parameters

✔ Use logarithmic scales for parameters like learning rate

✔ Always include cross-validation

✔ Monitor computation time vs. performance gains
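The first two Do's, together with the advice against fine-grained spacing early on, combine naturally into a coarse-to-fine search. This sketch uses NumPy's `logspace` for a one-value-per-decade learning-rate grid, then `geomspace` to build a narrower grid around the winner. `refine_around` is a hypothetical helper, and the coarse winner of 0.1 is an assumed result for illustration only.

```python
import numpy as np

# Coarse stage: learning rates spaced evenly on a log scale (one per decade)
coarse_lr = np.logspace(-3, 0, num=4)  # 0.001, 0.01, 0.1, 1.0
print(coarse_lr)


def refine_around(best, factor=2.0, num=3):
    """Hypothetical helper: a finer log-spaced grid centred on `best`,
    spanning best/factor to best*factor."""
    return np.geomspace(best / factor, best * factor, num=num)


# Suppose the coarse stage picked 0.1 (an assumed result, for illustration)
fine_lr = refine_around(0.1)
print(fine_lr)  # approximately 0.05, 0.1, 0.2
```

Each refined grid can then be searched while the other parameters stay fixed at their coarse-stage values, which keeps the candidate count small.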

Don'ts

✖ Don't grid search all parameters simultaneously

✖ Avoid very fine-grained value spacing initially

✖ Don't ignore computational constraints

✖ Never tune on test data

Conclusion

Grid Search remains a valuable tool for XGBoost hyperparameter tuning, especially when:

- You have a small to medium parameter space

- Computational resources are available

- You need reproducible, exhaustive results

For larger parameter spaces, consider combining an initial Grid Search with more advanced methods like Bayesian Optimization.

By systematically exploring hyperparameter combinations, you can unlock much more of XGBoost's potential and build models that perform at their best within the search space you define.