[Day 17] Supervised Machine Learning Type 8 - Gradient Boosting Machines (GBM) (with a Small Python Project)

Failed your first test? Learn from it! That’s exactly how Gradient Boosting works. Discover how machines ace predictions just like you would! 💡📊

![[Day 17] Supervised Machine Learning Type 8 - Gradient Boosting Machines (GBM) (with a Small Python Project)](/content/images/size/w2000/2025/03/Gradient-Boosting-Machines-machine-learning-algo---visual-selection.svg)

Imagine you are preparing for a math exam. You take a mock test to see how well you perform.

- Your first score? 50/100.

- Not great, but now you know where you struggle!

Instead of giving up, you analyze your mistakes:

✅ You did well in geometry

❌ You struggled with algebra

What do you do? You don’t study everything from scratch again. Instead:

- You focus more on algebra, since that’s where you lost the most marks.

- You take another test, and your score improves to 70/100.

- You repeat this process until you consistently score 95+.

This step-by-step learning process is exactly how Gradient Boosting Machines (GBM) work in machine learning.

What is Gradient Boosting?

Gradient Boosting is a machine learning algorithm that improves predictions step by step by focusing on errors. It builds multiple weak models (small decision trees) and learns from mistakes over time.

How GBM Works (Step by Step)

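Step 1: Initial Guess (Baseline Prediction)

- The model makes a rough prediction (like your first mock test score). In practice, this starting point is usually a simple constant, such as the average of the training targets.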

- Let’s say we are predicting house prices.

- The model predicts $300,000 for a house, but the actual price is $350,000.

- Error (Residual) = $350,000 - $300,000 = $50,000.

Step 2: Learn from Mistakes

- The model builds another small tree to predict the error ($50,000).

- Instead of fully correcting the mistake, it applies a small adjustment (controlled by the learning rate).
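To see what a "small adjustment" looks like in numbers, here is a tiny sketch that continues the house price example. It assumes a learning rate of 0.1 (the value used later in this post) and, as a simplification, that each new tree predicts the remaining error perfectly. Real trees only approximate it.

```python
actual_price = 350_000
prediction = 300_000    # initial guess from Step 1
learning_rate = 0.1     # assumed value; controls how much of the error is corrected per step

history = [prediction]
for step in range(1, 4):
    residual = actual_price - prediction      # error still left to fix
    prediction += learning_rate * residual    # apply only 10% of the correction
    history.append(prediction)
    print(f"Step {step}: residual = ${residual:,.0f}, new prediction = ${prediction:,.0f}")

# Step 1: residual = $50,000, new prediction = $305,000
# Step 2: residual = $45,000, new prediction = $309,500
# Step 3: residual = $40,500, new prediction = $313,550
```

Each step closes only a fraction of the gap, which is why GBM usually needs many trees.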

Step 3: Repeat Until Errors are Small

- Each new tree tries to correct the errors left over by all the trees built before it.

- After many iterations, the combined prediction gets closer and closer to the actual values.

🎯 Final Result: A strong model that combines multiple weak models, just like taking multiple tests and improving each time.
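The three steps above can be sketched from scratch in a few lines. This is a minimal illustration, not how any particular library implements it. It assumes a made-up one-feature regression dataset, squared error as the loss (so the "errors" are plain residuals), the mean of the targets as the initial guess, and scikit-learn's DecisionTreeRegressor as the weak model.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

# Synthetic data (an assumption for illustration): a noisy sine curve
rng = np.random.default_rng(0)
X = rng.uniform(0, 10, size=(200, 1))
y = np.sin(X[:, 0]) + rng.normal(0, 0.1, size=200)

learning_rate = 0.1
n_trees = 50

# Step 1: initial guess, here simply the average target value
prediction = np.full_like(y, y.mean())
initial_mse = np.mean((y - prediction) ** 2)

trees = []
for _ in range(n_trees):
    residuals = y - prediction                       # Step 2: current mistakes
    tree = DecisionTreeRegressor(max_depth=2, random_state=0)
    tree.fit(X, residuals)                           # small tree learns the mistakes
    prediction += learning_rate * tree.predict(X)    # apply a small correction
    trees.append(tree)                               # Step 3: repeat

final_mse = np.mean((y - prediction) ** 2)
print(f"Training MSE before boosting: {initial_mse:.4f}")
print(f"Training MSE after {n_trees} trees: {final_mse:.4f}")
```

The training error drops as trees are added. On real data you would check the error on held-out data too, since adding trees forever eventually overfits.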


🌍 Real-World Use Cases of GBM

GBM is used in various industries due to its high accuracy and ability to handle complex data.

1. Banking & Finance 🏦 – Credit Risk Scoring

📌 Problem: Banks need to decide whether to approve or reject a loan based on a customer's credit history.

🎯 GBM Solution:

- Predicts loan default risk by analyzing financial behavior.

- Uses customer data (credit score, income, debt-to-income ratio) to classify applicants as low-risk or high-risk borrowers.

- Many financial institutions, including JP Morgan, Wells Fargo, and Capital One, use GBM for fraud detection & credit scoring.

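🔹 Why GBM? It copes well with noisy data, and several implementations (XGBoost, LightGBM, scikit-learn's HistGradientBoostingClassifier) handle missing values natively, which suits real-world financial datasets.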

2. Healthcare 🏥 – Disease Diagnosis & Prediction

📌 Problem: Doctors need to predict the likelihood of a patient having a disease.

🎯 GBM Solution:

- GBM models are used for early detection of diseases like cancer, diabetes, and heart disease.

- Trained on patient medical history, test results, and lifestyle factors to predict risk levels.

- Used in predictive healthcare systems at hospitals & medical research labs.

🔹 Example: IBM Watson Health uses GBM for predicting hospital readmission rates.

3. E-commerce & Retail 🛒 – Customer Churn Prediction

📌 Problem: Online businesses need to identify customers likely to stop purchasing.

🎯 GBM Solution:

- Predicts which customers are about to churn (stop using a service).

- Uses purchase history, website activity, customer service interactions to identify at-risk customers.

- Helps businesses like Amazon, Flipkart, and Shopify to proactively offer discounts or loyalty rewards.

🔹 Why GBM? It accurately captures complex customer behaviors, leading to better retention strategies.

💻 Python Mini Project – JPMorgan Fraud Detection

Now, let’s build a JPMorgan-style fraud detection model using GBM on a small simulated dataset.

🔹 Dataset Overview

We simulate a dataset of 20 credit card transactions. The dataset contains:

- Transaction Amount

- Merchant Category

- Time of Transaction

- Transaction Location

- Previous Fraud History

- Fraudulent Transaction (Target: 0 = No, 1 = Yes)

🔹 Save this table as fraud_data.csv

Here is your fraud transactions dataset in tabular format:

| Transaction ID | Amount ($) | Merchant Category | Time (Hour) | Location | Previous Fraud | Fraud (0/1) |
|---|---|---|---|---|---|---|
| 1 | 20 | Grocery Store | 10 | New York | 0 | 0 |
| 2 | 5000 | Electronics | 23 | Miami | 1 | 1 |
| 3 | 75 | Restaurants | 20 | Chicago | 0 | 0 |
| 4 | 200 | Online Shopping | 2 | LA | 0 | 1 |
| 5 | 1500 | Jewelry | 22 | Vegas | 1 | 1 |
| 6 | 10 | Coffee Shop | 9 | Boston | 0 | 0 |
| 7 | 350 | Travel Booking | 5 | Dallas | 0 | 0 |
| 8 | 4500 | Electronics | 1 | NYC | 1 | 1 |
| 9 | 95 | Gas Station | 14 | SF | 0 | 0 |
| 10 | 2200 | Jewelry | 23 | Miami | 1 | 1 |
| 11 | 45 | Clothing | 12 | Seattle | 0 | 0 |
| 12 | 3200 | Electronics | 3 | Austin | 1 | 1 |
| 13 | 80 | Entertainment | 18 | Denver | 0 | 0 |
| 14 | 180 | Pharmacy | 11 | Houston | 0 | 0 |
| 15 | 2500 | Luxury | 22 | NYC | 1 | 1 |
| 16 | 30 | Grocery Store | 15 | New York | 0 | 0 |
| 17 | 500 | Online Shopping | 7 | San Diego | 0 | 0 |
| 18 | 4000 | Electronics | 4 | LA | 1 | 1 |
| 19 | 60 | Restaurants | 19 | Boston | 0 | 0 |
| 20 | 2900 | Jewelry | 23 | Vegas | 1 | 1 |
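If you would rather not type the table by hand, a short script along these lines can create fraud_data.csv for you. It is just one convenient way to do it: the rows are copied from the table above, and the column names must match exactly because the model code looks them up by name.

```python
import io
import pandas as pd

# Rows copied from the table above; header names must match the model code exactly
CSV_TEXT = """Transaction ID,Amount ($),Merchant Category,Time (Hour),Location,Previous Fraud,Fraud (0/1)
1,20,Grocery Store,10,New York,0,0
2,5000,Electronics,23,Miami,1,1
3,75,Restaurants,20,Chicago,0,0
4,200,Online Shopping,2,LA,0,1
5,1500,Jewelry,22,Vegas,1,1
6,10,Coffee Shop,9,Boston,0,0
7,350,Travel Booking,5,Dallas,0,0
8,4500,Electronics,1,NYC,1,1
9,95,Gas Station,14,SF,0,0
10,2200,Jewelry,23,Miami,1,1
11,45,Clothing,12,Seattle,0,0
12,3200,Electronics,3,Austin,1,1
13,80,Entertainment,18,Denver,0,0
14,180,Pharmacy,11,Houston,0,0
15,2500,Luxury,22,NYC,1,1
16,30,Grocery Store,15,New York,0,0
17,500,Online Shopping,7,San Diego,0,0
18,4000,Electronics,4,LA,1,1
19,60,Restaurants,19,Boston,0,0
20,2900,Jewelry,23,Vegas,1,1
"""

data = pd.read_csv(io.StringIO(CSV_TEXT))
data.to_csv("fraud_data.csv", index=False)   # file the model code reads later

print(data.shape)                            # rows, columns
print(data["Fraud (0/1)"].value_counts())    # how many safe vs fraudulent rows
```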

🔹 Python Code for GBM Model with Manual Input

import pandas as pd

from sklearn.model_selection import train_test_split

from sklearn.ensemble import GradientBoostingClassifier

from sklearn.metrics import accuracy_score, classification_report

# Step 1: Load Dataset

data = pd.read_csv("fraud_data.csv")

# Step 2: Define Features (X) and Target Variable (y)

X = data[['Amount ($)', 'Time (Hour)', 'Previous Fraud']]

y = data['Fraud (0/1)']

# Step 3: Split into Training & Testing Data

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Step 4: Train GBM Model

gbm = GradientBoostingClassifier(n_estimators=100, learning_rate=0.1, max_depth=3, random_state=42)

gbm.fit(X_train, y_train)

# Step 5: Model Accuracy

accuracy = accuracy_score(y_test, gbm.predict(X_test))

print(f"Model Accuracy: {accuracy:.2f}")

# Step 6: User Manual Input for Fraud Detection

print("\nEnter transaction details to check if it's fraudulent:")

amount = float(input("Enter transaction amount ($): "))

time = int(input("Enter transaction time (hour of the day, 0-23): "))

previous_fraud = int(input("Was the user previously involved in fraud? (1=Yes, 0=No): "))

# Create DataFrame for prediction

input_data = pd.DataFrame([[amount, time, previous_fraud]], columns=['Amount ($)', 'Time (Hour)', 'Previous Fraud'])

# Predict Fraud Probability

fraud_prediction = gbm.predict(input_data)[0]

fraud_probability = gbm.predict_proba(input_data)[0][1]

# Display Result

if fraud_prediction == 1:

    print(f"\n⚠️ ALERT: This transaction is likely **FRAUDULENT** with {fraud_probability*100:.2f}% probability!")

else:

    print(f"\n✅ This transaction is likely **SAFE**, with a fraud probability of {fraud_probability*100:.2f}%.")

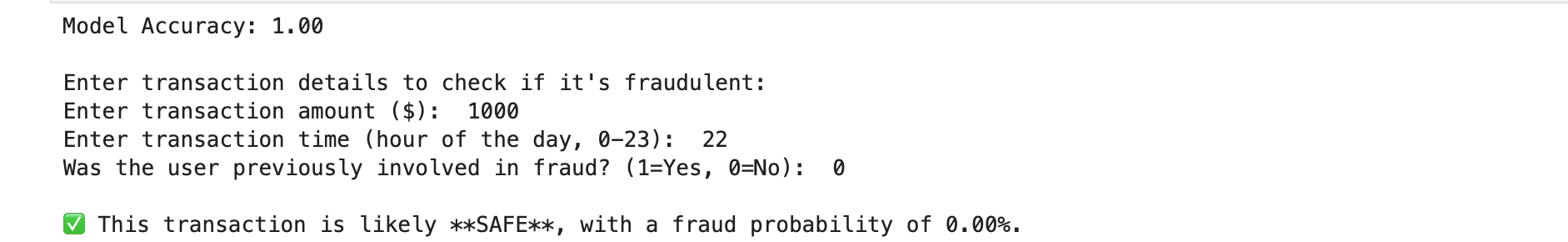

My input & output:

The transaction I checked manually was 'safe'.

Step-by-Step Explanation of the Fraud Detection Model Using GBM:

🛠 Step 1: Load Dataset

data = pd.read_csv("fraud_data.csv")

- Reads the dataset (fraud_data.csv) into a Pandas DataFrame.

📊 Step 2: Define Features (X) and Target (y)

X = data[['Amount ($)', 'Time (Hour)', 'Previous Fraud']]

y = data['Fraud (0/1)']

- X (Features): Transaction Amount, Time of Transaction, and Previous Fraud History.

- y (Target): Whether the transaction is fraudulent (1) or safe (0).

✂️ Step 3: Split Data into Training & Testing Sets

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

- 80% Data → Used for Training

- 20% Data → Used for Testing

- random_state=42 ensures consistent results each time.
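With only 20 rows, the test set has just 4 transactions, so a random split can easily end up lopsided. One optional tweak, which is not part of the original code, is to pass stratify=y so that both sets keep roughly the same fraud ratio. The sketch below rebuilds the three feature columns inline so it runs on its own.

```python
import pandas as pd
from sklearn.model_selection import train_test_split

# Same 20 transactions as the table, limited to the columns the model uses
data = pd.DataFrame({
    "Amount ($)": [20, 5000, 75, 200, 1500, 10, 350, 4500, 95, 2200,
                   45, 3200, 80, 180, 2500, 30, 500, 4000, 60, 2900],
    "Time (Hour)": [10, 23, 20, 2, 22, 9, 5, 1, 14, 23,
                    12, 3, 18, 11, 22, 15, 7, 4, 19, 23],
    "Previous Fraud": [0, 1, 0, 0, 1, 0, 0, 1, 0, 1,
                       0, 1, 0, 0, 1, 0, 0, 1, 0, 1],
    "Fraud (0/1)": [0, 1, 0, 1, 1, 0, 0, 1, 0, 1,
                    0, 1, 0, 0, 1, 0, 0, 1, 0, 1],
})
X = data[["Amount ($)", "Time (Hour)", "Previous Fraud"]]
y = data["Fraud (0/1)"]

# stratify=y keeps the safe/fraud proportions similar in both splits
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

print(f"Training rows: {len(X_train)}, testing rows: {len(X_test)}")
print("Fraud cases in test set:", int(y_test.sum()))
```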

🤖 Step 4: Train the Gradient Boosting Model

gbm = GradientBoostingClassifier(n_estimators=100, learning_rate=0.1, max_depth=3, random_state=42)

gbm.fit(X_train, y_train)

- Creates a GBM model with:

- 100 decision trees (n_estimators=100).

- A learning rate of 0.1 (how much the model adjusts at each step).

- Max tree depth of 3 to prevent overfitting.

- The model learns fraud patterns from training data.
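To get a feel for what n_estimators and learning_rate do, you can look at how the training loss falls as trees are added. The sketch below is an optional experiment, not part of the project code: it fits the same model twice on all 20 rows, once with the learning rate from the post (0.1) and once with a smaller, arbitrarily chosen one (0.01), and reads the per-tree loss from scikit-learn's train_score_ attribute.

```python
import pandas as pd
from sklearn.ensemble import GradientBoostingClassifier

# Same 20 transactions as the table, limited to the columns the model uses
data = pd.DataFrame({
    "Amount ($)": [20, 5000, 75, 200, 1500, 10, 350, 4500, 95, 2200,
                   45, 3200, 80, 180, 2500, 30, 500, 4000, 60, 2900],
    "Time (Hour)": [10, 23, 20, 2, 22, 9, 5, 1, 14, 23,
                    12, 3, 18, 11, 22, 15, 7, 4, 19, 23],
    "Previous Fraud": [0, 1, 0, 0, 1, 0, 0, 1, 0, 1,
                       0, 1, 0, 0, 1, 0, 0, 1, 0, 1],
    "Fraud (0/1)": [0, 1, 0, 1, 1, 0, 0, 1, 0, 1,
                    0, 1, 0, 0, 1, 0, 0, 1, 0, 1],
})
X = data[["Amount ($)", "Time (Hour)", "Previous Fraud"]]
y = data["Fraud (0/1)"]


def fit_gbm(learning_rate):
    """Fit the post's model with a given learning rate (helper for this demo only)."""
    model = GradientBoostingClassifier(
        n_estimators=100, learning_rate=learning_rate, max_depth=3, random_state=42
    )
    return model.fit(X, y)


fast = fit_gbm(0.1)    # value used in the post
slow = fit_gbm(0.01)   # smaller value, chosen only for comparison

for name, model in [("learning_rate=0.1", fast), ("learning_rate=0.01", slow)]:
    losses = model.train_score_   # training loss after each added tree
    print(f"{name}: loss after 1 tree = {losses[0]:.4f}, after 100 trees = {losses[-1]:.4f}")
```

A smaller learning rate takes smaller steps, so it needs more trees to reach the same training loss. That trade-off is the main thing you tune in practice.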

📈 Step 5: Check Model Accuracy

accuracy = accuracy_score(y_test, gbm.predict(X_test))

print(f"Model Accuracy: {accuracy:.2f}")

- Compares predictions on test data with actual fraud labels.

- Prints the accuracy of the model.
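Keep in mind that accuracy on 4 test rows can only be 0, 25, 50, 75, or 100%, so it is a very rough estimate. As an optional extra (not in the original code), the sketch below uses 5-fold stratified cross-validation so every row gets tested once, and prints a classification_report, which the original script imports but never calls. The fold count and the label names "Safe" and "Fraud" are my own choices.

```python
import pandas as pd
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.metrics import classification_report
from sklearn.model_selection import StratifiedKFold, cross_val_predict, cross_val_score

# Same 20 transactions as the table, limited to the columns the model uses
data = pd.DataFrame({
    "Amount ($)": [20, 5000, 75, 200, 1500, 10, 350, 4500, 95, 2200,
                   45, 3200, 80, 180, 2500, 30, 500, 4000, 60, 2900],
    "Time (Hour)": [10, 23, 20, 2, 22, 9, 5, 1, 14, 23,
                    12, 3, 18, 11, 22, 15, 7, 4, 19, 23],
    "Previous Fraud": [0, 1, 0, 0, 1, 0, 0, 1, 0, 1,
                       0, 1, 0, 0, 1, 0, 0, 1, 0, 1],
    "Fraud (0/1)": [0, 1, 0, 1, 1, 0, 0, 1, 0, 1,
                    0, 1, 0, 0, 1, 0, 0, 1, 0, 1],
})
X = data[["Amount ($)", "Time (Hour)", "Previous Fraud"]]
y = data["Fraud (0/1)"]

gbm = GradientBoostingClassifier(
    n_estimators=100, learning_rate=0.1, max_depth=3, random_state=42
)

# Each of the 5 folds holds out 4 rows; every row is used for testing exactly once
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=42)
scores = cross_val_score(gbm, X, y, cv=cv, scoring="accuracy")
print("Accuracy per fold:", scores.round(2))
print(f"Mean accuracy: {scores.mean():.2f}")

# Out-of-fold predictions give precision and recall for each class
y_pred = cross_val_predict(gbm, X, y, cv=cv)
print(classification_report(y, y_pred, target_names=["Safe", "Fraud"]))
```

For fraud detection, the recall on the "Fraud" row usually matters more than overall accuracy, because a missed fraud is costlier than a false alarm.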

📝 Step 6: User Input for Fraud Detection

amount = float(input("Enter transaction amount ($): "))

time = int(input("Enter transaction time (hour of the day, 0-23): "))

previous_fraud = int(input("Was the user previously involved in fraud? (1=Yes, 0=No): "))

- Takes user input for a new transaction.

- Asks for:

- Transaction Amount ($)

- Time of transaction (0-23 hours)

- Whether the user had previous fraud history (1=Yes, 0=No).

🔍 Step 7: Create DataFrame for Prediction

input_data = pd.DataFrame([[amount, time, previous_fraud]], columns=['Amount ($)', 'Time (Hour)', 'Previous Fraud'])

- Converts the user input into a DataFrame, matching the format of the training data.

⚠️ Step 8: Predict Fraud Probability

fraud_prediction = gbm.predict(input_data)[0]

fraud_probability = gbm.predict_proba(input_data)[0][1]

- Predicts whether the transaction is fraud (1) or safe (0).

- Calculates the probability of fraud.
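Here is a small sketch of how predict and predict_proba relate. predict_proba returns one column per class in the order given by gbm.classes_, which is why the code takes index 1 for fraud. The example transaction and the stricter 0.3 cut-off are made up for illustration. By default predict flags fraud at roughly a 50% probability, and a bank might prefer to flag earlier.

```python
import pandas as pd
from sklearn.ensemble import GradientBoostingClassifier

# Same 20 transactions as the table, limited to the columns the model uses
data = pd.DataFrame({
    "Amount ($)": [20, 5000, 75, 200, 1500, 10, 350, 4500, 95, 2200,
                   45, 3200, 80, 180, 2500, 30, 500, 4000, 60, 2900],
    "Time (Hour)": [10, 23, 20, 2, 22, 9, 5, 1, 14, 23,
                    12, 3, 18, 11, 22, 15, 7, 4, 19, 23],
    "Previous Fraud": [0, 1, 0, 0, 1, 0, 0, 1, 0, 1,
                       0, 1, 0, 0, 1, 0, 0, 1, 0, 1],
    "Fraud (0/1)": [0, 1, 0, 1, 1, 0, 0, 1, 0, 1,
                    0, 1, 0, 0, 1, 0, 0, 1, 0, 1],
})
X = data[["Amount ($)", "Time (Hour)", "Previous Fraud"]]
y = data["Fraud (0/1)"]

gbm = GradientBoostingClassifier(
    n_estimators=100, learning_rate=0.1, max_depth=3, random_state=42
).fit(X, y)

# Hypothetical transaction: $2,500 at 11 PM, user has previous fraud history
new_tx = pd.DataFrame([[2500, 23, 1]], columns=X.columns)

proba = gbm.predict_proba(new_tx)    # shape (1, 2): [P(safe), P(fraud)]
fraud_probability = proba[0, 1]      # column 1 corresponds to class 1 (fraud)
default_label = gbm.predict(new_tx)[0]

threshold = 0.3                      # assumed stricter cut-off, not from the post
flagged = fraud_probability >= threshold

print("Class order:", gbm.classes_)
print(f"Fraud probability: {fraud_probability:.2%}")
print("Default prediction:", default_label)
print(f"Flagged at threshold {threshold}:", flagged)
```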

🚨 Step 9: Display the Result

if fraud_prediction == 1:

    print(f"\n⚠️ ALERT: This transaction is likely **FRAUDULENT** with {fraud_probability*100:.2f}% probability!")

else:

    print(f"\n✅ This transaction is likely **SAFE**, with a fraud probability of {fraud_probability*100:.2f}%.")

- If 1 (fraud detected) → Shows an ALERT with fraud probability.

- If 0 (safe transaction) → Shows SAFE with fraud probability.

💡 Nutshell

Gradient Boosting Machines (GBM) are powerful, accurate, and widely used in real-world applications. Whether predicting fraudulent transactions, diagnosing diseases, or forecasting stock prices, GBM remains one of the top choices for structured data problems.

✅ GBM learns step-by-step, improving at each stage.

✅ It is widely used in banking, healthcare, e-commerce, and cybersecurity.

✅ It is one of the best algorithms for structured data analysis.

✅ It is highly customizable, but it needs careful hyperparameter tuning to perform well.

📊 Supervised Learning Algorithms Comparison

| Algorithm | Type | How It Works | Best Used When | Modern Use Cases |
|---|---|---|---|---|
| Linear Regression | Regression | Fits a straight line to minimize error between predicted and actual values. | You need a simple, interpretable model for predicting continuous values. | Predicting prices, forecasting sales, risk scoring. |
| Logistic Regression | Classification | Estimates probability using the logistic function to classify outcomes. | You’re classifying binary or multi-class targets with linear boundaries. | Spam detection, churn prediction, disease diagnosis. |
| Decision Tree | Both | Splits data into branches based on decision rules (if-else) for prediction. | You want interpretable rules and can handle non-linear data. | Loan approval, fraud detection, rule-based workflows. |
| Support Vector Machine (SVM) | Classification | Finds the best boundary (hyperplane) that separates classes with the widest margin. | You need high accuracy and clear margins between classes (even in small datasets). | Image classification, face detection, bioinformatics. |
| K-Nearest Neighbors (KNN) | Both | Classifies based on the majority class among the K nearest neighbors. | You want simplicity and don’t need a model explanation (great for cold-start problems). | Recommender systems, customer segmentation, personalization. |
| Naive Bayes | Classification | Applies Bayes' Theorem assuming independence between features. | You have categorical data and want fast, baseline classification. | Text classification, email filtering, sentiment analysis. |
| Random Forest | Both | Builds multiple decision trees and averages their predictions (ensemble learning). | You need high performance, non-linear classification/regression with feature importance. | Credit scoring, e-commerce ranking, fraud detection. |
| Gradient Boosting (GBM) | Both | Builds trees sequentially where each tree corrects the errors of the previous ones. | You want top performance and can handle slower training time and hyperparameter tuning. | Click-through rate prediction, stock trend prediction, customer lifetime value estimation. |

When to Use Which Algorithm?

- Regression Problems:

- Use Linear Regression for interpretable, linear relationships.

- Use Random Forest/GBM for non-linear, high-accuracy needs.

- Classification Problems:

- Use Logistic Regression for binary/multi-class linear problems.

- Use SVM for small-to-medium datasets with clear margins.

- Use Naive Bayes for text/NLP tasks (fast but simplistic).

- Use Random Forest/GBM for tabular data with complex patterns.

- Modern Trends:

- GBM variants (XGBoost, LightGBM, CatBoost) dominate structured data competitions.

- Hybrid models (e.g., RF + SVM) are used in healthcare/biology.

- Deep Learning (CNNs/RNNs) replaces traditional methods for image/text/sequential data.
