[Day 36] Reinforcement Learning Type 9 – Monte Carlo Tree Search (MCTS) (with a Practical Python Project)

MCTS doesn't guess - it simulates, evaluates, and conquers, just like a grandmaster plotting 10 moves ahead.

![[Day 36] Reinforcement Learning Type 9 – Monte Carlo Tree Search (MCTS) (with a Practical Python Project)](/content/images/size/w2000/2025/04/MCTS2.svg)

Fun fact: AlphaGo, the first AI to beat a Go world champion, combined Monte Carlo Tree Search with deep neural networks.

🎯 What is Monte Carlo Tree Search (MCTS)?

Monte Carlo Tree Search (MCTS) is a powerful decision-making algorithm often used in reinforcement learning and game-playing AI. It’s like a strategic planner that explores possible future scenarios to make the best choice, balancing exploration (trying new ideas) and exploitation (using what’s already known to work). MCTS builds a search tree by simulating many possible outcomes, evaluating them, and then picking the most promising path.

It explores the space of possible actions like a strategist:

- 📈 Simulates multiple future paths (Monte Carlo Rollouts)

- 🌳 Builds a decision tree incrementally

- 🔄 Chooses actions that balance exploration and exploitation

Imagine you’re planning a road trip with friends. You’re at a junction with multiple routes to your destination, and you need to decide which path to take. MCTS is like a GPS that doesn’t just look at the map—it simulates thousands of trips down each route, considering factors like traffic, road conditions, and scenic views. For each route, it plays out the journey multiple times (using random simulations), scores the outcomes (e.g., fastest route gets +10, scenic route gets +5), and builds a decision tree to guide you. Over time, it focuses on the most promising routes while still occasionally exploring others to ensure it doesn’t miss a hidden gem.

MCTS operates in four key steps:

- Selection: Start at the root of the tree (current state) and choose a promising path based on past simulations, using a formula like UCT (Upper Confidence Bound for Trees) to balance exploration and exploitation.

- Expansion: Add a new node (possible action) to the tree if the path hasn’t been fully explored.

- Simulation: From the new node, simulate a random playout to the end (e.g., finish the game or reach a goal), estimating the outcome.

- Backpropagation: Update the tree by propagating the simulation’s result (reward) back up the path, improving the decision-making for future choices.

Example 1: Chess Grandmaster AI

Picture a chess AI like AlphaZero. At each move, MCTS simulates thousands of possible games from the current position. It explores moves like “move pawn forward” or “capture with knight,” plays out random games to the end, and scores the outcomes (win = +1, loss = -1). Over many simulations, it builds a tree of moves, focusing on the most promising strategies while still exploring alternatives to avoid tunnel vision.

Example 2: Vacation Planner App

Imagine a vacation planning app that helps you decide what to do each day of your trip. Should you visit the museum, go hiking, or relax at the beach? MCTS simulates different itineraries, considering factors like weather, travel time, and your preferences. It might simulate 1,000 possible 5-day plans, scoring each based on enjoyment (+10 for a sunny hike, -5 for a rainy museum day), and then recommend the best schedule.

👨💻 Real-World Use Case 1: Supply Chain Optimization

Imagine you’re managing a global supply chain for a retail company like Walmart. You need to decide how to route shipments from factories to stores, considering factors like shipping costs, delivery times, and warehouse capacities. The goal is to minimize costs while ensuring products arrive on time.

MCTS in Action: MCTS builds a decision tree of possible shipping routes. It starts with the current state (e.g., shipment at Factory A) and explores actions like “ship via air to Warehouse B” or “ship via sea to Port C.” For each action, it simulates the rest of the delivery process thousands of times, accounting for variables like delays or fuel costs, and scores the outcomes (e.g., on-time delivery = +20, delayed = -10). Over time, it identifies the most cost-effective and reliable route.

👨💻 Real-World Use Case 3: Autonomous Vehicle Path Planning

Imagine an autonomous vehicle navigating a busy city. The car needs to decide its next move at an intersection: turn left, go straight, or wait for pedestrians. The goal is to reach the destination quickly while avoiding collisions and obeying traffic rules.

MCTS in Action: MCTS builds a tree of possible driving decisions. From the current intersection (root node), it explores actions like “turn left.” It simulates the outcome: turning left might lead to a clear road (+5) or a pedestrian crossing (-10). It runs thousands of simulations, considering variables like traffic lights, other vehicles, and road conditions, and scores each path. Over time, it identifies the safest and fastest route.

♟️ Python Project : Chess AI

🎯 About the Project

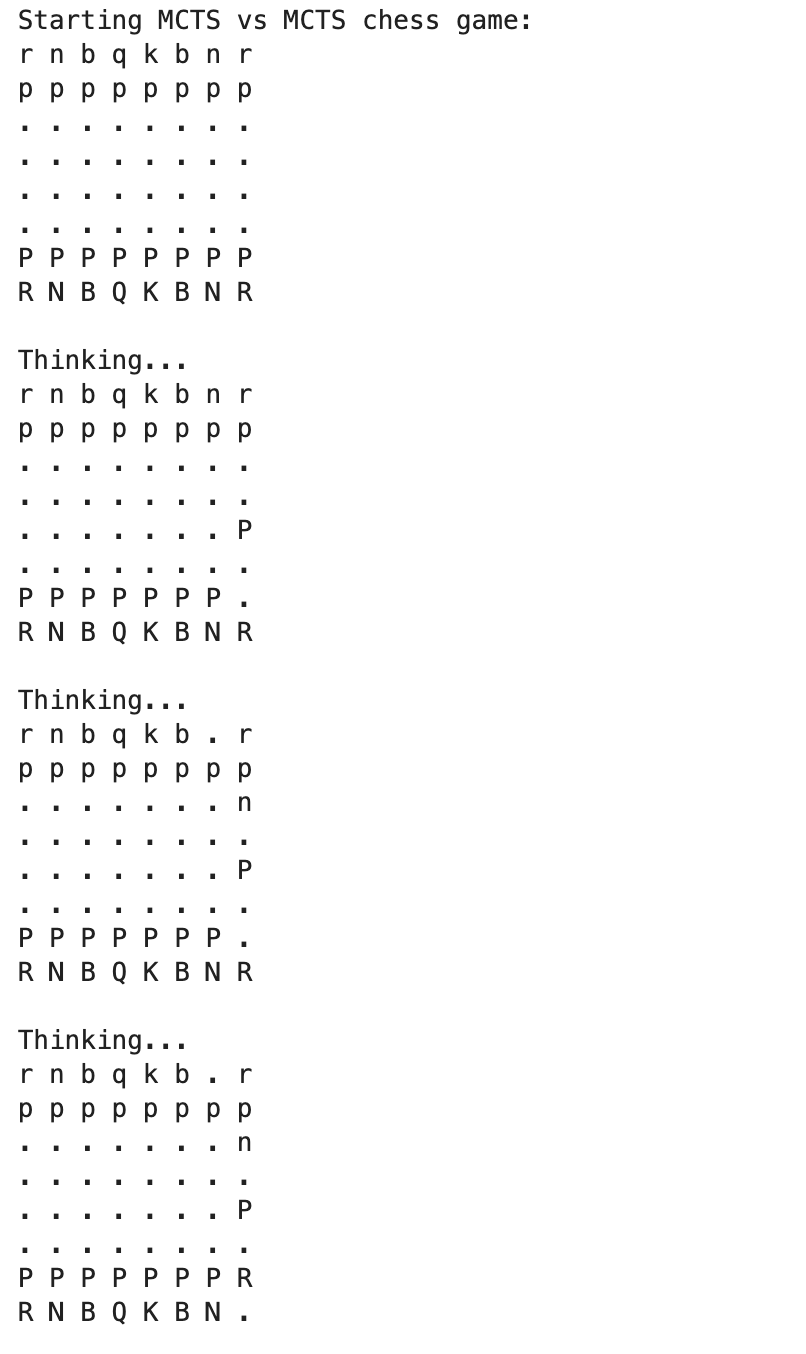

The AI plays against itself using MCTS logic until the game ends.

This project implements a Monte Carlo Tree Search (MCTS) agent to play chess using the python-chess library. It simulates multiple random games from each possible move, evaluates the outcomes, and chooses the move that leads to the best win probability.

🐍 Python Code

import chess

import chess.engine

import random

import numpy as np

class MCTSNode:

def __init__(self, board, parent=None, move=None):

self.board = board

self.parent = parent

self.move = move

self.children = []

self.visits = 0

self.wins = 0

def expand(self):

legal_moves = list(self.board.legal_moves)

for move in legal_moves:

new_board = self.board.copy()

new_board.push(move)

self.children.append(MCTSNode(new_board, self, move))

def best_child(self, c_param=1.4):

choices_weights = [

(child.wins / (child.visits + 1e-4)) + c_param * np.sqrt(np.log(self.visits + 1) / (child.visits + 1e-4))

for child in self.children

]

return self.children[np.argmax(choices_weights)]

def simulate(self):

sim_board = self.board.copy()

while not sim_board.is_game_over():

legal_moves = list(sim_board.legal_moves)

sim_board.push(random.choice(legal_moves))

outcome = sim_board.outcome()

if outcome.winner is None:

return 0.5

return 1 if outcome.winner == self.board.turn else 0

def backpropagate(self, result):

self.visits += 1

self.wins += result

if self.parent:

self.parent.backpropagate(1 - result) # invert result for opponent

def mcts(board, simulations=100):

root = MCTSNode(board)

root.expand()

for _ in range(simulations):

node = root

while node.children:

node = node.best_child()

if node.visits > 0 and not node.board.is_game_over():

node.expand()

if node.children:

node = random.choice(node.children)

result = node.simulate()

node.backpropagate(result)

return max(root.children, key=lambda c: c.visits).move

# Run a basic game against itself

board = chess.Board()

print("Starting MCTS vs MCTS chess game:")

while not board.is_game_over():

print(board)

print("\nThinking...")

move = mcts(board, simulations=200)

board.push(move)

print("\nFinal board:")

print(board)

print("Game over:", board.outcome())Result:

AI will play against AI until one defeats other.

This is really cool if you see the AI playing against each other.

Run it on Jupyter Notebook and experience it.

💬 Join the DecodeAI WhatsApp Channel for regular AI updates → Click here