[Day 8] Types of Large Language Models (LLMs)

From GPT to Gemini, LLMs come in many forms—text wizards, code companions, image interpreters, and multilingual maestros. Meet the AI family tree.

![[Day 8] Types of Large Language Models (LLMs)](/content/images/size/w2000/2025/03/_--visual-selection--10-.svg)

It's tricky to give a definitive "types of LLMs" classification because the field is evolving rapidly and models can be categorized in multiple ways. Ask ChatGPT, Claude, or Gemini this question and you will get different answers.

Large Language Models (LLMs), as we now know, are advanced AI systems capable of understanding, processing, and generating human-like text. They vary in architecture, purpose, training methodology, and input/output modalities. Below are some common ways to distinguish between them, drawn from several perspectives.

1. General-Purpose LLMs

General-purpose LLMs are trained on diverse and large datasets to handle a wide array of tasks. They excel in generating text, answering questions, summarizing content, and more. These models are versatile and serve as the backbone of many AI applications.

Examples:

- GPT Series (OpenAI): Known for creative writing, coding assistance, and general conversational tasks.

- Claude (Anthropic): Focuses on nuanced reasoning and ethical AI responses.

- LLaMA (Meta): Efficient foundation model with openly available weights, suited to research and practical applications.

Key Features:

- Broad applicability across domains.

- Often serve as the base for fine-tuning into more specialized models.

2. Domain-Specific LLMs

These models are tailored for particular industries or tasks, such as healthcare, legal services, or coding. They are usually fine-tuned from general-purpose models to excel in specific domains.

Examples:

- Medical LLMs (e.g., Med-PaLM by Google): Tuned on medical data for diagnosis support and clinical research.

- Legal LLMs: Specialized in legal documents and case law, aiding in tasks like contract analysis and legal research.

- Code LLMs (e.g., Codex by OpenAI, the model behind the original GitHub Copilot): Focused on generating and debugging code, trained on public source code such as repositories hosted on GitHub.

Key Features:

- Deep understanding of industry-specific jargon and requirements.

- Typically more accurate on tasks inside their domain, but less versatile outside it.

3. Instruction-Tuned LLMs

Instruction-tuned LLMs are fine-tuned on examples that pair an instruction with the desired response, so they learn to do what the user asks rather than simply continue the text. This improves task accuracy and alignment with user intent.

Examples:

- Flan-T5 (Google): Optimized for instruction-based tasks like summarization and translation.

- InstructGPT (OpenAI): Designed to better understand user instructions and align responses with human values.

Key Features:

- Focused on task completion based on explicit user prompts.

- Improved alignment with user needs and expectations.
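
To make the idea of instruction-response pairs concrete, here is a minimal sketch of how one training example might be turned into text. The `InstructionExample` class and the `PROMPT_TEMPLATE` layout are hypothetical, chosen only for illustration; real instruction datasets each define their own format.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class InstructionExample:
    instruction: str  # what the user asks the model to do
    input_text: str   # context the instruction applies to
    response: str     # the answer the model should learn to produce


# Hypothetical template, assumed for this sketch only.
PROMPT_TEMPLATE = (
    "### Instruction:\n{instruction}\n\n"
    "### Input:\n{input_text}\n\n"
    "### Response:\n"
)


def build_training_text(example: InstructionExample) -> Tuple[str, str]:
    """Return (prompt, target): the model is trained to continue prompt with target."""
    prompt = PROMPT_TEMPLATE.format(
        instruction=example.instruction,
        input_text=example.input_text,
    )
    return prompt, example.response


example = InstructionExample(
    instruction="Summarize the text in one sentence.",
    input_text="LLMs vary in architecture, purpose, and modality.",
    response="LLMs differ in how they are built and what they are used for.",
)
prompt, target = build_training_text(example)
print(prompt + target)
```

During fine-tuning, the model sees many such prompts and is rewarded for producing the paired target, which is what nudges it from plain text continuation toward following instructions.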

4. Multilingual LLMs

Multilingual models are capable of understanding and generating content in multiple languages. They are essential for applications requiring cross-lingual understanding, translation, and global communication.

Examples:

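- BLOOM (BigScience): Trained on 46 natural languages and 13 programming languages, with a focus on non-English languages.

- XLM-R (Meta): An encoder-only model trained on text in 100 languages. Strong in cross-lingual understanding and multilingual classification, though it does not generate free-form text.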

Key Features:

- Enable communication across language barriers.

- Often used in translation services and global applications.

5. Multimodal LLMs

Multimodal models process and integrate multiple types of data, such as text, images, audio, and video, making them highly versatile for diverse applications.

Examples:

- GPT-4V (OpenAI): Can process both text and images, making it useful for visual reasoning tasks.

- Gemini (Google): Handles text, images, audio, and video for complex multimodal interactions.

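- CLIP (OpenAI): Maps images and text into a shared embedding space, enabling tasks like zero-shot image classification and image-text search. Strictly speaking it is a vision-language model rather than an LLM, but it is often used as the vision component in multimodal systems.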

Key Features:

- Seamlessly integrate different data types.

- Used in advanced applications like visual Q&A, image captioning, and creative design.
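
One common way to connect images and text, used by models such as CLIP, is to map both into the same vector space and compare the vectors. The sketch below illustrates only the comparison step. The three-dimensional embeddings and file names are made up for illustration; a real model would produce vectors with hundreds of dimensions from its image and text encoders.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up embeddings standing in for the output of an image encoder.
image_embeddings = {
    "dog_photo.jpg": [0.9, 0.1, 0.0],
    "city_skyline.jpg": [0.0, 0.2, 0.9],
}

# Made-up embeddings standing in for the output of a text encoder.
text_embeddings = {
    "a photo of a dog": [0.8, 0.2, 0.1],
    "a photo of a city": [0.1, 0.1, 0.9],
}


def best_caption(image_name):
    """Pick the text whose embedding is closest to the image embedding."""
    image_vec = image_embeddings[image_name]
    return max(
        text_embeddings,
        key=lambda text: cosine_similarity(image_vec, text_embeddings[text]),
    )


for name in image_embeddings:
    print(name, "->", best_caption(name))
```

The same comparison works in the other direction: embed a text query once and rank images by similarity, which is the basis of text-to-image search.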

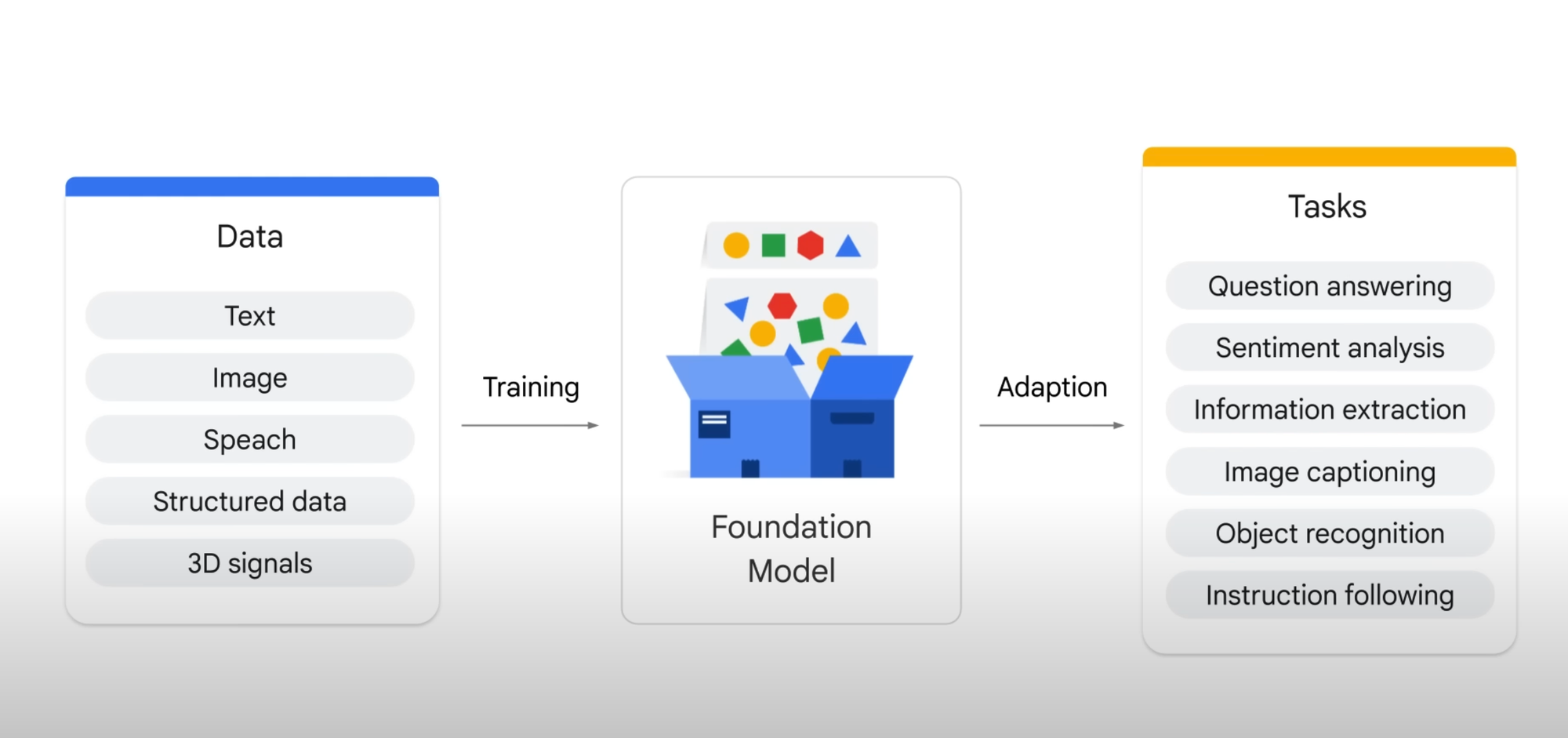

6. Foundation Models

Foundation models are massive LLMs trained on broad datasets to provide general-purpose language understanding and generation capabilities. These models are often used as a base for fine-tuning into more specialized LLMs.

Examples:

- PaLM (Google): General-purpose model with applications ranging from conversation to code generation.

- GPT-4 (OpenAI): Known for its versatility and high-quality outputs.

Key Features:

- Serve as the starting point for creating specialized models.

- Require extensive computational resources to train.

7. Open-Source vs. Proprietary LLMs

Open-Source Models:

These models release their weights, and often their code, for public use, modification, and distribution, fostering innovation and transparency in the AI community. License terms vary by model, and some restrict certain uses.

- Examples: LLaMA 2 (Meta), Falcon (TII), BLOOM.

Proprietary Models:

These are owned by companies and are typically closed-source, with access governed by licensing agreements.

- Examples: GPT-4 (OpenAI), Claude (Anthropic), Gemini (Google).

Key Features:

- Open-Source: Promotes collaboration and customization.

- Proprietary: Often offers advanced features and managed access, but is restricted by licensing and cost.

8. By Architecture

Autoregressive Models:

- Examples: GPT series, Turing-NLG.

- Features: Predict the next word/token in a sequence, making them ideal for text generation.

Autoencoder Models:

- Examples: BERT, RoBERTa.

- Features: Focus on understanding context by masking parts of the input and predicting them from the surrounding text on both sides.

Sequence-to-Sequence Models:

- Examples: T5, mT5.

- Features: Process input sequences and generate output sequences, useful for tasks like translation and summarization.
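
The difference between predicting the next token and filling in a masked token can be shown with a toy example. The sketch below uses simple word counts over a tiny made-up corpus instead of a neural network, so it is only an analogy for the two training objectives, not how GPT or BERT are implemented.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus, split into tokens.
corpus = "the cat sat on the mat . the dog sat on the rug .".split()

# Autoregressive style: count which token follows each token (left context only).
next_counts = defaultdict(Counter)
for prev, nxt in zip(corpus, corpus[1:]):
    next_counts[prev][nxt] += 1


def predict_next(prev_token):
    """Most frequent token seen after prev_token."""
    return next_counts[prev_token].most_common(1)[0][0]


def generate(start_token, steps):
    """Generate text one token at a time, feeding each prediction back in."""
    tokens = [start_token]
    for _ in range(steps):
        tokens.append(predict_next(tokens[-1]))
    return tokens


# Masked style: count which token appears between a left and a right neighbour.
middle_counts = defaultdict(Counter)
for left, mid, right in zip(corpus, corpus[1:], corpus[2:]):
    middle_counts[(left, right)][mid] += 1


def fill_mask(left_token, right_token):
    """Most frequent token seen between left_token and right_token."""
    return middle_counts[(left_token, right_token)].most_common(1)[0][0]


print(generate("cat", 3))       # left-to-right generation
print(fill_mask("cat", "on"))   # "cat [MASK] on" uses context from both sides
```

The generation loop is why autoregressive models suit open-ended writing, while the masked predictor sees both neighbours, which is why that style of model tends to be used for understanding tasks such as classification.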

Architecture used: Most LLMs, including the GPT series, BERT, and T5, use the Transformer architecture because it parallelizes computation efficiently and captures long-range dependencies. A few older language models do not, because they predate the Transformer: at that time, Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM) networks were the standard choice. These are now largely outdated in the context of LLMs.
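
The core operation behind the Transformer is scaled dot-product attention. The sketch below is a minimal pure-Python version under a simplifying assumption: the token vectors are used directly as queries, keys, and values, whereas a real Transformer first applies learned projection matrices and uses several attention heads. The example vectors are made up.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    # Each query is handled independently, so these iterations could run in parallel.
    for q in queries:
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # The output is a weighted average of all value vectors.
        output = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(output)
        all_weights.append(weights)
    return outputs, all_weights


# Three made-up token vectors, used as Q, K, and V (no learned projections).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Every token scores every other position directly, however far apart they are, which is where the long-range behaviour comes from. An RNN, by contrast, has to pass information step by step through the sequence.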

Nutshell

LLMs can be classified into various types based on their architecture, purpose, and functionality. From general-purpose models like GPT to specialized ones like Med-PaLM and multimodal systems like GPT-4V, these categories highlight the diverse applications and capabilities of LLMs. As AI technology continues to evolve, new types and applications of LLMs keep emerging, and the categories above will overlap and shift with them.
