What is MCP [Model Context Protocol] and Why?

![What is MCP [Model Context Protocol] and Why?](/content/images/size/w2000/2025/06/MCP2-3.gif)

Modern LLMs (like ChatGPT, Grok, etc.) are impressive; however, they aren't very useful in the real world unless they have access to more information than just their training data—and can actually do something with it. AI models are often trained on vast but static datasets with a "knowledge cutoff" date.

Retrieval-Augmented Generation (RAG) has been a popular attempt to solve this problem. It addresses the need to access current data from the web or information from a specific document you’ve uploaded. But it still doesn’t have access to your specific private data (like your company’s internal knowledge base, your real-time calendar, or the number of leave days you have left).

RAG can only retrieve details and show them to you—it can’t act on them, like applying for leave on your behalf.

While RAG is useful, a new term is gaining attention—MCP. Every post online talks about “how MCP works,” but the real question is: why should we care?

Model Context Protocol (MCP) is a method for giving AI models the context they need—allowing them to access real-time information and take action in other apps. It’s this ability that enables AI tools to generate useful content, deliver meaningful insights, and perform tasks that actually move work forward.

We will cover:

1: What is Model Context Protocol (MCP)?

2: What problem is MCP solving?

3: MCP's Goal

4: MCP's Architecture and How It Works

5: MCP & AI agents

1: What is Model Context Protocol (MCP)?

Model Context Protocol (MCP) is an open, standardized protocol proposed by Anthropic (the makers of Claude), designed to safely and securely connect AI tools to external systems—like your company’s CRM, Slack, or deployment server.

This means your AI assistant can access relevant data and trigger actions in those tools—such as updating a record, sending a message, or kicking off a deployment. By giving AI assistants the ability to both understand and act, MCP enables more useful, context-aware, and proactive AI experiences.

2: What problem is MCP solving?

AI tools would be far more useful if they could not only access data but also take action based on it.

For general questions, an LLM's training data might be enough. But if you want an AI to tell you how your company's sales compare to last quarter, analyze competitor strategies, send a report, create a task in your project management tool, update a record in your CRM, or notify your team on Slack—you need a way for it to interact with those apps.

MCP makes that possible by giving AI tools a standardized way to discover and invoke actions in external systems. It bridges the gap between understanding and execution, so AI isn't just offering insights—it's actively getting things done.

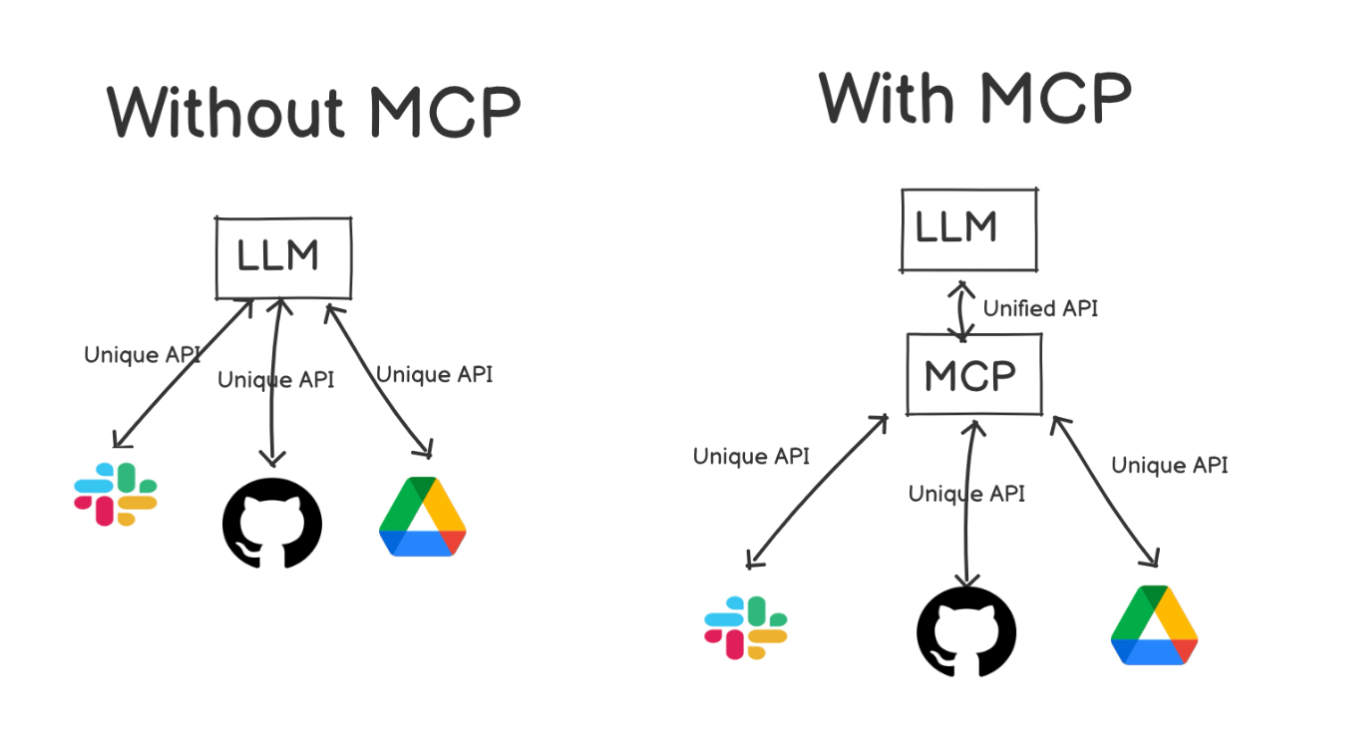

Another major problem it solves: Instead of building custom integrations for every service, MCP defines a standard for how these services should interoperate—how requests are structured, what actions are available, and how they can be discovered. It acts as a two-way communication bridge between AI models and the outside world (tools, apps, and data sources not part of the model’s training data).

This lets developers create secure, consistent, and reusable integrations between AI assistants and external systems.
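To make "how requests are structured" a little more concrete: MCP messages are JSON-RPC 2.0, and the specification defines a `tools/list` method for discovery and a `tools/call` method for invocation. The sketch below only builds those two requests in Python. The `make_request` helper, the `create_page` tool, and its arguments are hypothetical, made up for illustration.

```python
import json
from itertools import count
from typing import Optional

_ids = count(1)  # each JSON-RPC request carries an id so the reply can be matched to it


def make_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request envelope, the message format MCP uses."""
    request = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        request["params"] = params
    return request


# Step 1: discovery. Ask the server which tools it exposes.
list_request = make_request("tools/list")

# Step 2: invocation. Call one tool by name with structured arguments.
# "create_page" and its arguments are hypothetical, not a real published tool.
call_request = make_request(
    "tools/call",
    {"name": "create_page", "arguments": {"title": "Lisbon itinerary"}},
)

if __name__ == "__main__":
    print(json.dumps(list_request, indent=2))
    print(json.dumps(call_request, indent=2))
```

Because every MCP server answers the same two methods, the AI app needs no per-service request format; only the tool names and argument schemas differ.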

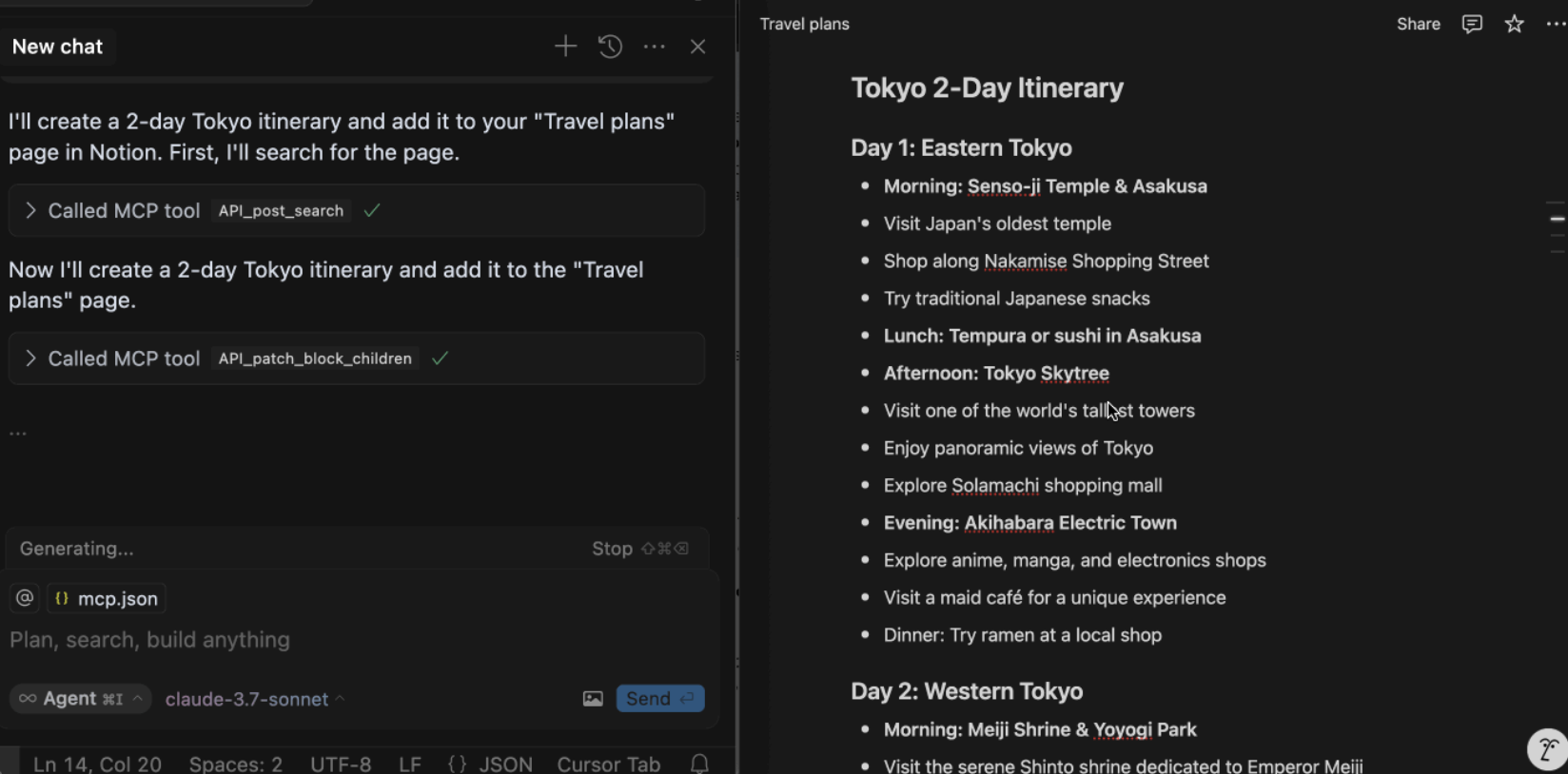

For example, using an MCP implementation, a user could ask an AI to create a travel itinerary directly in Notion. The AI would do it from the prompt alone, with no manual copy-pasting.

That means AI tools are no longer limited to answering questions—they can perform actions like sending emails, creating tasks, or updating records, based on the capabilities each app exposes through MCP.

Previously, doing this would require either:

- Building custom integrations per app, or

- Using an iPaaS platform (like Zapier or Make) that already had prebuilt integrations.

MCP changes that. It provides a standardized blueprint for how AI tools can interact with any data source. Any app that supports MCP can expose a structured set of tools or actions that AI assistants and agents can understand and use. So when you ask an AI to do something, it can check what’s available and take the appropriate action—giving you much more flexibility.

It also simplifies switching tools. For example, if two different file storage apps implement MCP, moving from Google Drive to Dropbox might require changing just a few lines of configuration—instead of rewriting the entire integration.
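As a rough illustration of that "few lines of configuration" idea, here is a sketch of a host-side server registry. The `mcpServers` / `command` / `args` layout mirrors the configuration format used by Claude Desktop, but both package names and the `switch_file_server` helper are hypothetical placeholders, not real published servers or APIs.

```python
import json

# Host configuration: which MCP server to launch for file storage.
# The package name is a hypothetical placeholder.
config_google_drive = {
    "mcpServers": {
        "files": {"command": "npx", "args": ["-y", "example-gdrive-mcp-server"]}
    }
}


def switch_file_server(config: dict, package: str) -> dict:
    """Return a copy of the config with the "files" entry pointed at another package."""
    updated = json.loads(json.dumps(config))  # simple deep copy for plain JSON data
    updated["mcpServers"]["files"]["args"] = ["-y", package]
    return updated


# Swapping providers touches one entry; the original config is left unchanged.
config_dropbox = switch_file_server(config_google_drive, "example-dropbox-mcp-server")
```

The rest of the application keeps talking to the `files` entry. Whether the swap is really this small depends on both servers exposing comparable tools.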

Isn't This Already Done by OpenAI or Others?

Not quite. Features like OpenAI’s function calling or Anthropic’s tool use are tied to their own ecosystems. Each provider has its own way of defining tools, formatting requests, and managing interactions.

If you switch AI providers, you typically have to rebuild your integration layer to match the new provider's system. This creates vendor lock-in.

MCP solves that by offering a vendor-neutral standard—so your tools can work across different AI platforms without needing to be rebuilt from scratch.
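The lock-in is visible in the tool definitions themselves. The sketch below takes one tool described the way an MCP server lists it (`name`, `description`, `inputSchema`) and reshapes it into the wrapper that OpenAI's Chat Completions function calling expects and the one Anthropic's Messages API expects. The field layouts reflect those APIs to the best of our knowledge at the time of writing; the `get_leave_balance` tool and both helper functions are hypothetical.

```python
# One tool, described the way an MCP server would list it.
# The tool itself is hypothetical.
mcp_tool = {
    "name": "get_leave_balance",
    "description": "Return the number of leave days an employee has left.",
    "inputSchema": {
        "type": "object",
        "properties": {"employee_id": {"type": "string"}},
        "required": ["employee_id"],
    },
}


def to_openai(tool: dict) -> dict:
    """Reshape an MCP tool descriptor for OpenAI Chat Completions function calling."""
    return {
        "type": "function",
        "function": {
            "name": tool["name"],
            "description": tool["description"],
            "parameters": tool["inputSchema"],
        },
    }


def to_anthropic(tool: dict) -> dict:
    """Reshape an MCP tool descriptor for Anthropic Messages API tool use."""
    return {
        "name": tool["name"],
        "description": tool["description"],
        "input_schema": tool["inputSchema"],
    }
```

The JSON Schema is identical in all three shapes; only the wrapper differs. Without a shared standard, that wrapper (plus the result-handling code around it) gets rewritten for each provider.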

🟡 Claude (Anthropic) was the first assistant to support Model Context Protocol (MCP) natively. Other providers, including OpenAI and Google, have since announced MCP support.


3: MCP's Goal

Standardization & Interoperability: MCP aims to become a universal standard, independent of any single AI provider.

Anthropic maintains a list of available MCP servers, or if you're a developer, you can write your own.

Its ambitions include:

- Server-side reusability: A company like Salesforce could build one MCP server exposing actions (e.g., fetch_contact_record). Any AI client, whether using OpenAI, Anthropic, Cohere, etc., could access it.

- Client-side flexibility: App developers using an MCP client can connect to a variety of tools offering MCP servers. Crucially, they can also swap out the underlying AI provider without reworking all the tool integrations, assuming the new provider supports the MCP client role.

- Decoupling & ecosystem: MCP decouples the tool from the AI provider. This opens up a broader ecosystem where tool providers offer standard MCP endpoints, and AI developers consume them using standardized MCP clients—instead of relying on provider-specific integrations.

While today’s custom solutions may seem just as complex, MCP’s long-term value lies in reusability, vendor-neutral integrations, and a more open ecosystem.

A simple analogy: HTTP enables browsers and apps to interact with any website via a common protocol. MCP aims to be HTTP for AI—a shared language for AI-to-tool interoperability.

As Wyatt Barnett puts it, MCP is "a way for the chatbot to interact with and control other software on your computer."

4: MCP's Architecture and How It Works

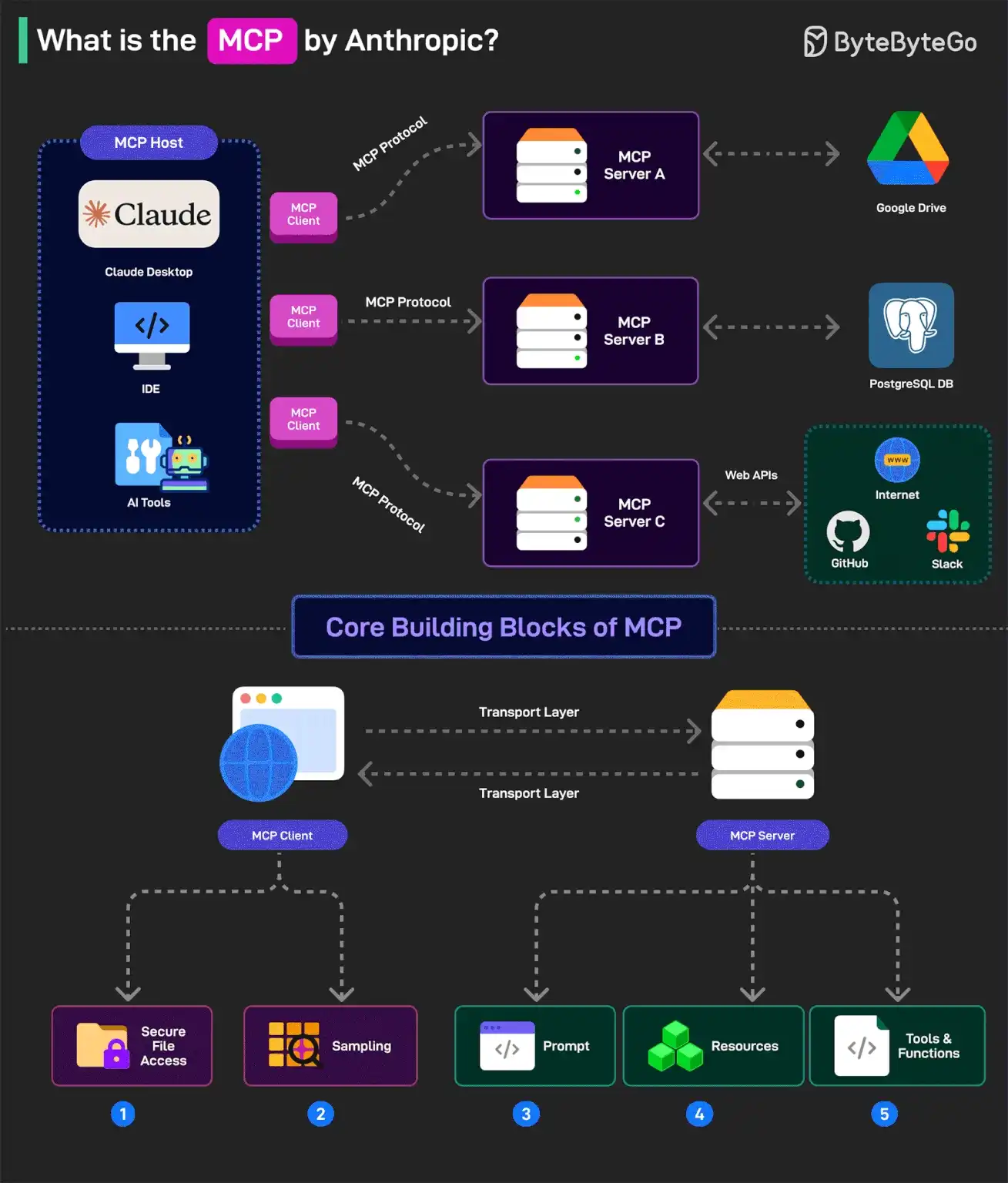

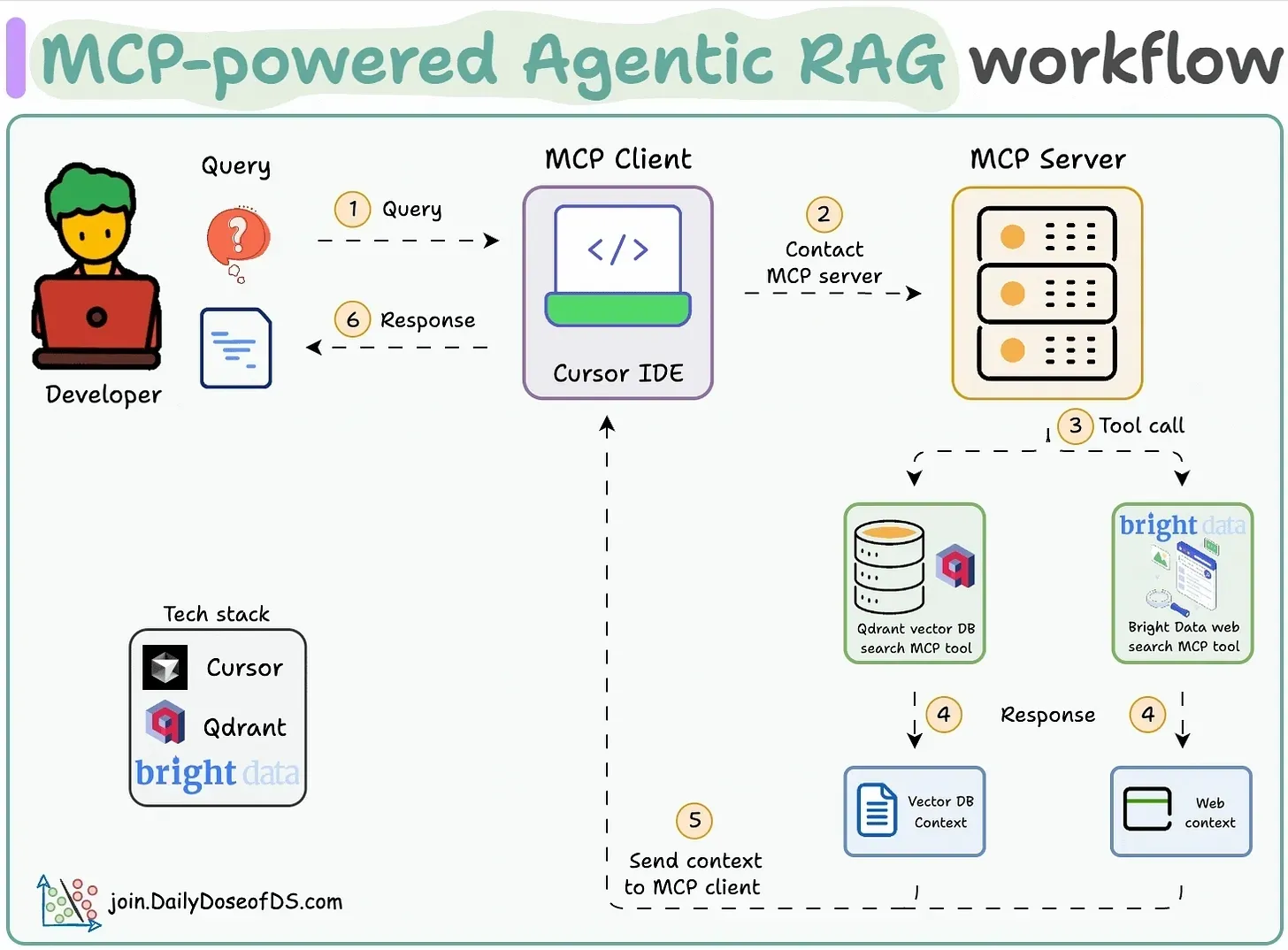

MCP uses a client-host-server model:

- MCP Host: Usually the chatbot, IDE, or AI app. It manages clients, permissions, and security. The host may trigger MCP requests based on user input or autonomously.

- MCP Client: Created by the host, it connects to a single server and facilitates communication between the host and server.

- MCP Server: Connects to a tool or data source (local or remote) and exposes specific capabilities.

Examples:

- A file storage server might expose “search for a file” or “read a file.”

- A team chat server might expose “get mentions” or “update status.”
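The three roles can be sketched in plain Python. This is an in-process toy, not the MCP SDK: real clients talk to servers over a transport such as stdio or HTTP, and every class, tool name, and return value here is made up for illustration.

```python
from typing import Callable, Dict, List, Set


class Server:
    """Exposes named capabilities for one tool or data source."""

    def __init__(self, name: str, tools: Dict[str, Callable[..., str]]):
        self.name = name
        self.tools = tools

    def list_tools(self) -> List[str]:
        return sorted(self.tools)

    def call_tool(self, tool: str, **arguments) -> str:
        return self.tools[tool](**arguments)


class Client:
    """Holds a one-to-one connection to a single server and relays calls to it."""

    def __init__(self, server: Server):
        self._server = server

    def list_tools(self) -> List[str]:
        return self._server.list_tools()

    def call_tool(self, tool: str, **arguments) -> str:
        return self._server.call_tool(tool, **arguments)


class Host:
    """The AI app: creates one client per server and enforces permissions."""

    def __init__(self, allowed_tools: Set[str]):
        self.clients: Dict[str, Client] = {}
        self.allowed_tools = allowed_tools

    def connect(self, server: Server) -> None:
        self.clients[server.name] = Client(server)

    def call(self, server_name: str, tool: str, **arguments) -> str:
        if tool not in self.allowed_tools:  # the permission check lives in the host
            raise PermissionError(f"{tool} is not permitted")
        return self.clients[server_name].call_tool(tool, **arguments)


files = Server("files", {"search_file": lambda query: f"found: {query}.txt"})
chat = Server("chat", {
    "get_mentions": lambda: "2 new mentions",
    "update_status": lambda text: f"status set to {text}",
})

host = Host(allowed_tools={"search_file", "get_mentions"})
host.connect(files)
host.connect(chat)
```

Calling `host.call("files", "search_file", query="report")` is routed through the `files` client to its server, while `host.call("chat", "update_status", text="away")` raises `PermissionError` because the host has not allowed that tool.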

MCP servers can expose three kinds of capabilities:

- Prompts – Predefined templates for LLMs (selectable via slash commands, menus, etc.)

- Resources – Structured data (e.g., files, databases, commit history) that give context

- Tools – Functions that let the model perform actions (e.g., API calls, file updates)
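As a sketch of how one server might advertise all three, here is a hypothetical Git-themed server described as plain data. The method names in the comments (`prompts/list`, `resources/list`, `tools/list`) come from the MCP specification; the `Capabilities` class and every entry in it are invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Capabilities:
    prompts: List[dict] = field(default_factory=list)    # what prompts/list would return
    resources: List[dict] = field(default_factory=list)  # what resources/list would return
    tools: List[dict] = field(default_factory=list)      # what tools/list would return

    def summary(self) -> Dict[str, List[str]]:
        """Identifiers of everything the server offers, grouped by primitive."""
        return {
            "prompts": [p["name"] for p in self.prompts],
            "resources": [r["uri"] for r in self.resources],
            "tools": [t["name"] for t in self.tools],
        }


# Hypothetical server: one entry per primitive.
git_server = Capabilities(
    prompts=[{"name": "summarize_changes", "description": "Template for a release summary"}],
    resources=[{"uri": "git://repo/commits", "name": "Commit history"}],
    tools=[{"name": "create_branch", "description": "Create a new branch"}],
)

if __name__ == "__main__":
    print(git_server.summary())
```

The split also hints at who is in charge of each primitive: the specification describes prompts as user-controlled, resources as application-controlled, and tools as model-controlled.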

While similar to APIs, MCP is more flexible. It wraps traditional APIs inside a unified protocol, acting as a bridge between AI models and external systems—without hardcoding service-specific logic.

5: MCP & AI agents

AI agents are autonomous, AI-driven tools that can make decisions and take action on their own. MCP helps enable this behavior by letting developers connect AI assistants to apps and data sources—making it possible to build AI tools that act independently across different systems. However, using MCP doesn’t automatically turn any AI tool into an agent, and it’s not the only way to build one. MCP is simply a method for linking AIs to other tools.

How MCP Powers AI Agents Today: MCP enables AI agents to interact with real-world tools—like CRMs, databases, or ticketing systems—by providing a standardized way to connect. Developers use MCP to build agents that can not only access data but also take action, such as sending emails, updating records, or triggering workflows. This unlocks true autonomy, where AI can reason, decide, and execute tasks across connected systems—securely and reliably.
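A toy version of that reason, decide, execute loop is sketched below. The `fake_model` function stands in for a real LLM call and simply follows a fixed script; the tools and ticket data are invented. The point is the shape of the loop: the model proposes a tool call, the host runs it, and the result is fed back until the model produces a final answer.

```python
from typing import Callable, Dict, List

# Hypothetical tools an MCP server might expose.
TOOLS: Dict[str, Callable[..., str]] = {
    "get_ticket": lambda ticket_id: f"Ticket {ticket_id}: printer offline",
    "send_email": lambda to, body: f"email sent to {to}",
}


def fake_model(history: List[dict]) -> dict:
    """Stand-in for an LLM: picks the next step from how many tool results it has seen."""
    results = [m for m in history if m["role"] == "tool"]
    if len(results) == 0:
        return {"tool": "get_ticket", "arguments": {"ticket_id": "42"}}
    if len(results) == 1:
        return {
            "tool": "send_email",
            "arguments": {"to": "it@example.com", "body": results[0]["content"]},
        }
    return {"final": "Ticket 42 was forwarded to IT."}


def run_agent(task: str, max_steps: int = 5) -> str:
    """Alternate between model decisions and tool execution until a final answer."""
    history = [{"role": "user", "content": task}]
    for _ in range(max_steps):  # cap the loop so a confused model cannot spin forever
        decision = fake_model(history)
        if "final" in decision:
            return decision["final"]
        result = TOOLS[decision["tool"]](**decision["arguments"])
        history.append({"role": "tool", "content": result})
    return "Stopped: step limit reached."
```

MCP's contribution is the `TOOLS` part: the agent discovers and calls tools through one protocol instead of bespoke glue per service. The decision-making still comes from the model and the loop around it.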

MCP is best suited for developers building custom AI integrations. It’s ideal for technical teams who want precise control and flexibility when adding AI to their apps or workflows. For those without a coding background—or who want a quicker, simpler setup—no-code platforms like Zapier Agents offer an alternative. These tools let you build custom agents that work with 7,000+ apps, no programming or infrastructure needed.

Both paths serve different needs: MCP is powerful for custom, developer-driven solutions, while no-code tools prioritize ease of use and fast deployment for everyone else.

MCP Server: Wrapping APIs & Hosting Explained

- API Wrapping: Companies can support MCP by wrapping their existing APIs inside an MCP Server, which translates standard MCP requests into internal API calls and formats the responses accordingly.

- Where It’s Hosted:

  - On Provider’s Cloud: Most commonly, companies host the MCP server in their cloud (AWS, Azure, etc.).

  - On-Premises: For internal tools, it may run on a private network.

  - Locally (Dev Use): Developers might run it locally for testing, but this isn’t typical for production.

Bottom Line: The MCP server lives where the data/tool provider chooses—usually in their own infrastructure.
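The API-wrapping idea can be sketched as a single handler that accepts a `tools/call` request, forwards it to an existing internal function, and wraps the result in MCP's content format. Here `crm_get_contact` stands in for a company's existing API and is purely hypothetical, as are the `TOOL_HANDLERS` table and the contact data; the `fetch_contact_record` tool name is borrowed from the Salesforce example earlier.

```python
import json


def crm_get_contact(contact_id: str) -> dict:
    """Stand-in for an existing internal API (hypothetical)."""
    return {"id": contact_id, "name": "Ada Lovelace", "email": "ada@example.com"}


# Map MCP tool names to the internal calls they wrap.
TOOL_HANDLERS = {
    "fetch_contact_record": lambda args: crm_get_contact(args["contact_id"]),
}


def handle_tools_call(request: dict) -> dict:
    """Translate a JSON-RPC tools/call request into an internal API call."""
    params = request["params"]
    handler = TOOL_HANDLERS.get(params["name"])
    if handler is None:
        # -32602 is the JSON-RPC 2.0 "invalid params" error code.
        return {
            "jsonrpc": "2.0",
            "id": request["id"],
            "error": {"code": -32602, "message": f"Unknown tool: {params['name']}"},
        }
    payload = handler(params.get("arguments", {}))
    # MCP tool results carry a list of content items; here, a single text item.
    return {
        "jsonrpc": "2.0",
        "id": request["id"],
        "result": {
            "content": [{"type": "text", "text": json.dumps(payload)}],
            "isError": False,
        },
    }
```

The internal API does not change at all. The wrapper owns the translation in both directions, which is why the same server can sit in the provider's cloud, on-premises, or on a developer's laptop.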

Summing it up

Just like USB lets you connect different devices to your computer without needing to understand each one, MCP lets AI assistants connect to various services without custom setup. You plug in an MCP “connector,” and the AI instantly knows what tools are available and how to use them.

MCP is a standard aimed at streamlining how AI models connect to external tools and data sources. While it doesn't introduce brand-new capabilities, it provides a cleaner, more consistent, and vendor-neutral approach to building those connections.

For developers, MCP helps by:

- Reducing integration complexity

- Avoiding platform lock-in

- Making it easier to build AI systems that stay current and context-aware

For users, this translates into AI that feels smarter, more relevant, and better connected—without needing to know how it all works behind the curtain.

It’s still early days, but MCP is already gaining strong traction in the AI ecosystem.

I hope it's clear now.

Akshay

Linkedin

Reference:

- Bens Bites

- Zapier

- modelcontextprotocol.io

- ByteByteGo